The brain is a plastic organ, constantly changing and adapting: it forms new connections as we take in novel thoughts and memories, and loses others as we age. Two recent discoveries have further illuminated how and when these processes occur, challenging current theories of neurogenesis, myelination, and neuronal pruning.

The majority of these neurodevelopmental processes take place when we are young, as different regions of the brain undergo immense cortical growth while we mature, learn, and acquire new skills and knowledge. This growth occurs in waves across the brain. It starts in the occipital and parietal lobes during childhood, as we refine our sensory abilities, fine motor movement, coordination, and spatial awareness. Next, the temporal lobe matures, improving our memory and language abilities. Finally, the frontal cortex, which is responsible for our abilities to inhibit impulses, control and plan behavior, pay attention, and perform demanding cognitive tasks, becomes fully functional during adolescence. However, more cells and connections are created than are needed, and this neurogenesis is closely followed by periods of pruning, in which unused synapses are weeded out and destroyed. Pruning makes the brain more efficient: it conserves space and cellular energy and streamlines neural processing so that only the essential, most-used connections remain.

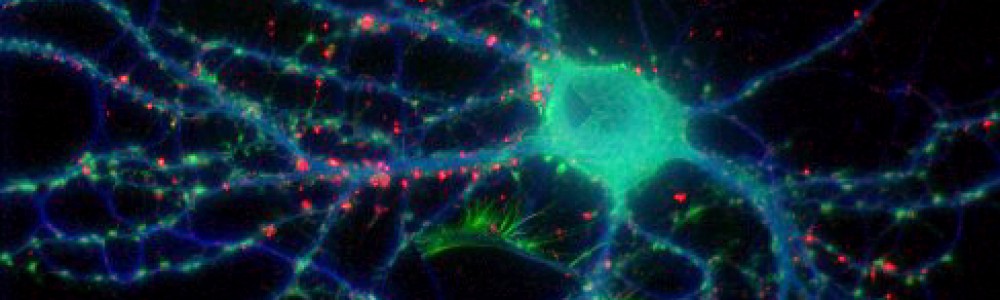

Until recently, the mechanism behind this pruning was largely a mystery. However, a study published last month in Science revealed that microglia, immune cells in the brain that identify and destroy invading microbes, may be involved in the process. Microglia travel through the brain and converge on areas of damage to clear away dead neurons and cellular debris. They do this through phagocytosis, the cellular process of engulfing solid particles, which is similar to the process of autophagy I wrote about last week.

Scientists at the European Molecular Biology Laboratory in Monterotondo, Italy, discovered that microglia also monitor synapses in a similar way, consuming unused and unneeded connections during periods of brain maturation. The researchers used an electron microscope to examine microglial cells located near synapses in the brains of mice. These cells contained traces of both SNAP25, a presynaptic protein, and PSD95, a postsynaptic protein found at excitatory synapses. Because both proteins belong to synapses, their presence inside microglia suggests that these cells had consumed both the presynaptic and postsynaptic parts of the connections. It is still unknown how microglia identify which synapses to engulf, though scientists believe fractalkine, a large protein involved in communication between neurons and microglia, plays a role.

It was initially thought that cortical maturation ended with adolescence; however, new evidence suggests that neural development, particularly in the prefrontal cortex, continues well into one’s 30s. Studies published this year in PNAS and the Journal of Neuroscience indicate that both the addition of new white matter (myelinated axons that connect neurons) and a decrease in gray matter (reflecting synaptic connections eliminated through pruning) continue into early adulthood. This discovery has implications for the timing of several psychiatric disorders, including schizophrenia and drug abuse, which commonly arise in the early to mid-20s. If the brain is still developing during this period, we may remain vulnerable to environmental influences for longer than previously suspected, or problems with this final stage of development could play a role in these disorders.